AI has grown enormously over the past decade.

Building AI apps can be profitable in a world like this, so here are 25 open source projects you can use to build your AI app and take it to the next level.

There are exciting concepts like interactive communication with 3D characters using voice synthesis, so stick around to the end.

There will be plenty of resources, articles, project ideas, guides, and more to follow along.

Let’s cover it all!

1. Taipy – Data and AI algorithms into production-ready web applications.

Taipy is an open source Python library for easy, end-to-end application development, featuring what-if analyses, smart pipeline execution, built-in scheduling, and deployment tools.

In simpler terms, Taipy lets you build a GUI for Python-based applications and improves data flow management.

For example, you can plot a graph of a dataset and add a slider so users can play with the data, alongside other useful features.

While Streamlit is a popular tool, its performance can decline significantly when handling large datasets, making it impractical for production-level use.

Taipy, on the other hand, offers simplicity and ease of use without sacrificing performance. By trying Taipy, you’ll experience firsthand its user-friendly interface and efficient handling of data.

Under the hood, Taipy utilizes various libraries to streamline development and enhance functionality.

Get started with the following command.

pip install taipy

Let’s talk about the latest Taipy v3.1 release.

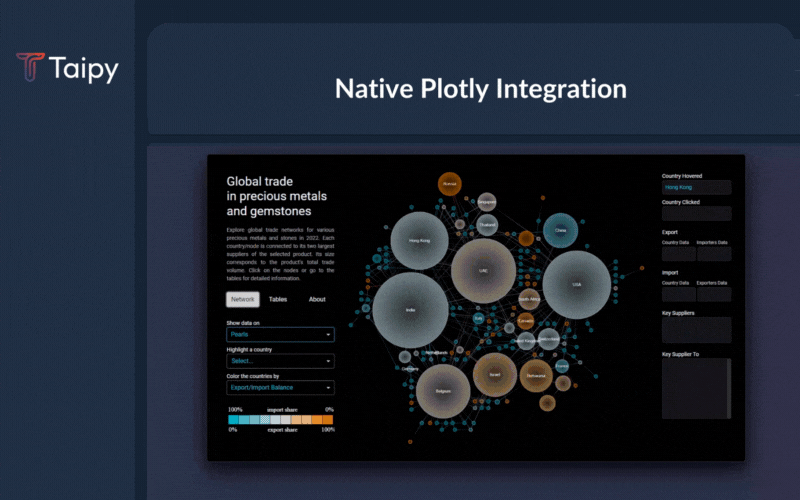

The latest release makes it possible to visualize any HTML or Python objects within Taipy’s versatile part object.

This means libraries like Folium, Bokeh, Vega-Altair, and Matplotlib are now available for visualizations.

This release also brings native support for Plotly's Python library, making it easier to plot charts.

They have also improved performance using distributed computing. The best part is that Taipy and all its dependencies are now fully compatible with Python 3.12, so you can work with the most up-to-date tools and libraries while using Taipy for your projects.

You can read the docs.

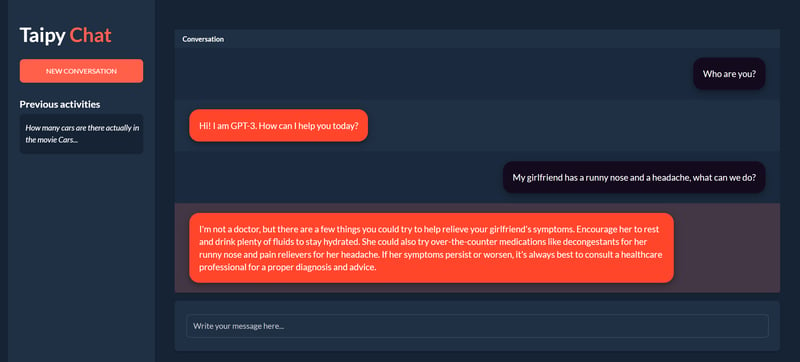

For instance, you can see the chat demo which uses OpenAI’s GPT-4 API to generate responses to your messages. You can easily change the code to use any other API or model.

Another useful thing is that the Taipy team has provided a VSCode extension called Taipy Studio to accelerate the building of Taipy applications.

You can also deploy your applications with the Taipy cloud.

If you want to read a blog to see codebase structure, you can read Create a Web Interface for your LLM in Python using Taipy by HuggingFace.

It is generally tough to try out new technologies, but Taipy has provided 10+ demo tutorials with code & proper docs for you to follow along.

For instance, some live demo examples:

Taipy has 7k+ Stars on GitHub and is on the v3 release so they are constantly improving.

2. Supabase – open source Firebase alternative.

To build an AI app, you need a backend, and Supabase serves as an excellent backend service provider to meet this need.

Get started with the following npm command (Next.js).

npx create-next-app -e with-supabase

This is how you can use CRUD operations.

import { createClient } from '@supabase/supabase-js'

// Initialize

const supabaseUrl = 'https://chat-room.supabase.co'

const supabaseKey = 'public-anon-key'

const supabase = createClient(supabaseUrl, supabaseKey)

// Create a new chat room

const newRoom = await supabase

.from('rooms')

.insert({ name: 'Supabase Fan Club', public: true })

// Get public rooms and their messages

const publicRooms = await supabase

.from('rooms')

.select(`

name,

messages ( text )

`)

.eq('public', true)

// Update multiple users

const updatedUsers = await supabase

.from('users')

.update({ highlight_color: 'gold' })

.eq('account_type', 'paid')

You can read the docs.

You can build a crazy fast application with Auth, realtime, Edge functions, storage, and many more. Supabase covers it all!

Supabase also provides several starter kits, such as Next.js with LangChain, Stripe with Next.js, or an AI Chatbot.

Supabase has 63k+ Stars on GitHub and plenty of contributors with 27k+ commits.

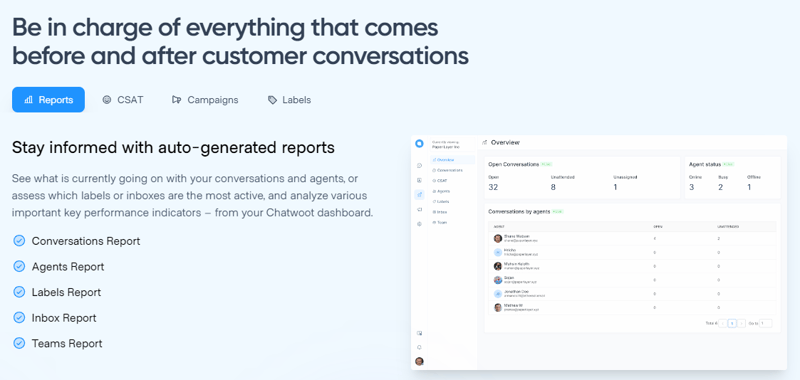

3. Chatwoot – live chat, email support, omni-channel desk & own your data.

Chatwoot connects with popular customer communication channels like Email, Website live-chat, Facebook, Twitter, WhatsApp, Instagram, Line, etc. This helps you deliver a consistent customer experience across channels – from a single dashboard.

This could be important in various cases, such as when you’re building a community around your AI app.

You can read the docs to discover a wide range of integration options so it’s easier to manage the whole ecosystem.

They have very detailed documentation along with snapshot examples in each integration such as WhatsApp channel with WhatsApp Cloud API. You can deploy to Heroku with 1-click or self-host as per needs.

They have 18k+ Stars on GitHub and are on the v3.6 release.

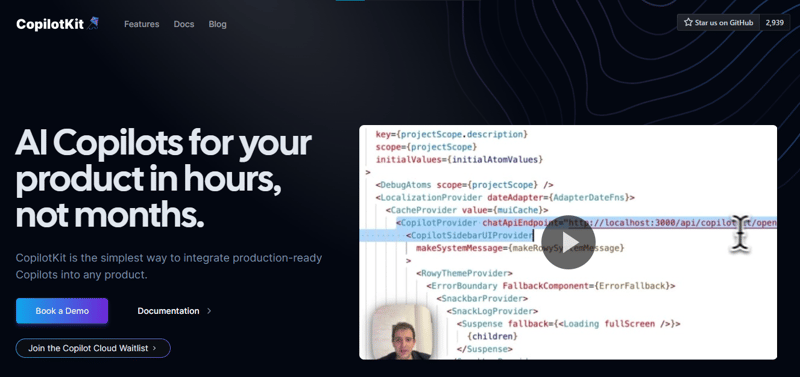

4. CopilotKit – AI Copilots for your product in hours.

You can integrate key AI features into React apps using two React components. They also provide built-in (fully-customizable) Copilot-native UX components like <CopilotKit />, <CopilotPopup />, <CopilotSidebar />, <CopilotTextarea />.

Get started with the following npm command.

npm i @copilotkit/react-core @copilotkit/react-ui @copilotkit/react-textarea

This is how you can integrate a CopilotTextarea.

import { CopilotTextarea } from "@copilotkit/react-textarea";

import { useState } from "react";

export function SomeReactComponent() {

const [text, setText] = useState("");

return (

<>

<CopilotTextarea

className="px-4 py-4"

value={text}

onValueChange={(value: string) => setText(value)}

placeholder="What are your plans for your vacation?"

autosuggestionsConfig={{

textareaPurpose: "Travel notes from the user's previous vacations. Likely written in a colloquial style, but adjust as needed.",

chatApiConfigs: {

suggestionsApiConfig: {

forwardedParams: {

max_tokens: 20,

stop: [".", "?", "!"],

},

},

},

}}

/>

</>

);

}

You can read the docs.

The basic idea is to build AI Chatbots in minutes that can be useful for LLM-based full-stack applications.

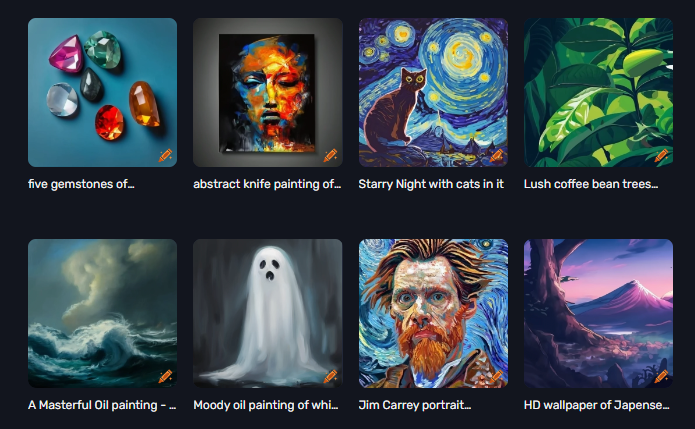

5. DALL·E Mini – Generate images from a text prompt.

OpenAI had the first impressive model for generating images with DALL·E.

Craiyon/DALL·E mini is an attempt to reproduce those outcomes using an open source model.

If you’re wondering about the name, DALL·E mini was renamed Craiyon at the request of OpenAI, and it uses similar techniques in a more easily accessible web app format.

You can use the model on Craiyon.

Get started with the following command (for development).

pip install dalle-mini

You can read the docs.

You can read DALL·E Mini Explained to learn more about the dataset, architecture, and algorithms involved.

You can also read Ultimate Guide to the Best Photorealistic AI Images & Prompts for a better understanding, along with good resources.

DALL·E Mini has 14k+ Stars on GitHub, and it’s currently at the v0.1 release.

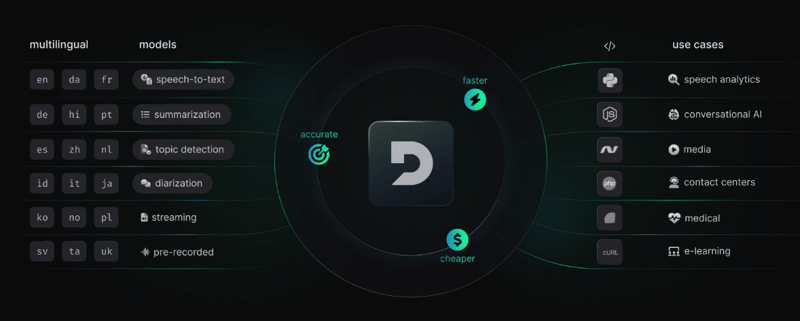

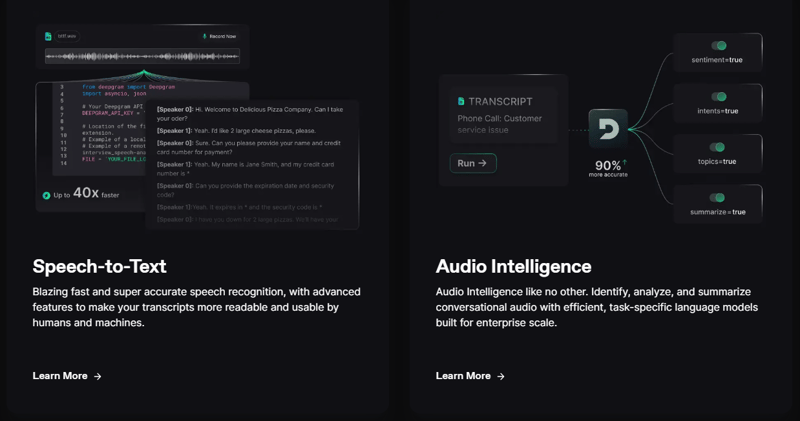

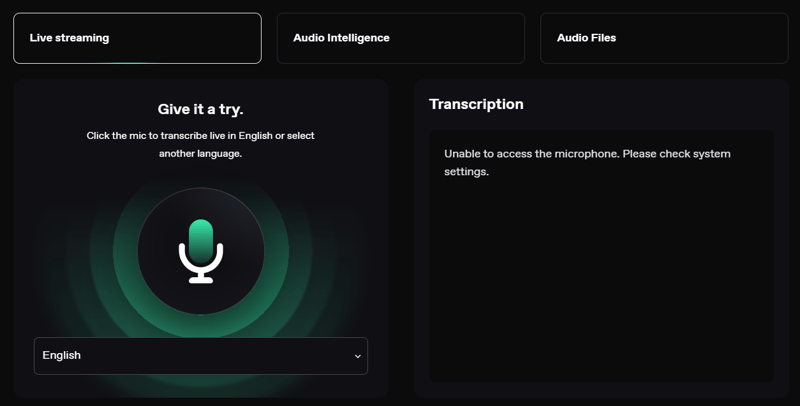

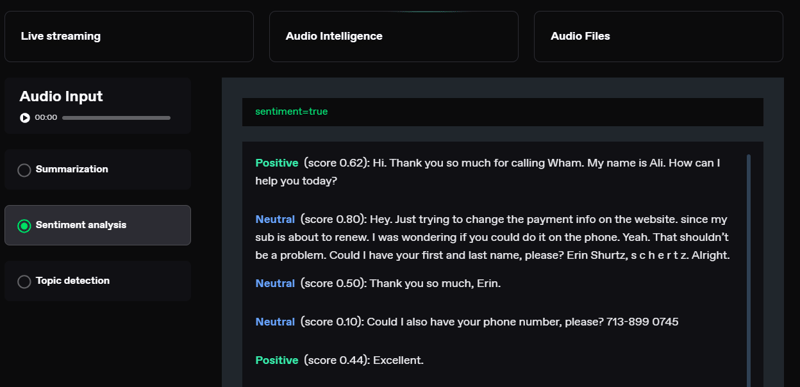

6. Deepgram – Build Voice AI into your apps.

From startups to NASA, Deepgram APIs are used to transcribe and understand millions of audio minutes every day. Fast, accurate, scalable, and cost-effective.

It provides speech-to-text and audio intelligence models for developers.

Even though they have a freemium model, the limits on the free tier are sufficient to get you started.
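To make this concrete, here is a minimal sketch of calling the speech-to-text REST endpoint from Python using only the standard library. It assumes the pre-recorded audio endpoint at https://api.deepgram.com/v1/listen, token-based authorization, and the response layout shown in the code. The function names are my own, so check the official docs before relying on the details.

```python
import json
import urllib.request

# Assumed endpoint for transcribing pre-recorded audio.
DEEPGRAM_URL = "https://api.deepgram.com/v1/listen"


def build_request(api_key: str, audio_url: str) -> urllib.request.Request:
    """Build (but do not send) a transcription request for a hosted audio file."""
    body = json.dumps({"url": audio_url}).encode("utf-8")
    return urllib.request.Request(
        DEEPGRAM_URL,
        data=body,
        headers={
            "Authorization": f"Token {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def transcribe(api_key: str, audio_url: str) -> str:
    """Send the request and return the first transcript found in the response."""
    with urllib.request.urlopen(build_request(api_key, audio_url)) as resp:
        payload = json.load(resp)
    # Assumed response layout: results -> channels -> alternatives -> transcript
    return payload["results"]["channels"][0]["alternatives"][0]["transcript"]
```

Calling `transcribe("YOUR_API_KEY", "https://example.com/audio.wav")` would send the request; both arguments here are placeholders.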

The visualization is next-level. You can inspect the response for a live stream or an audio file and compare the results of the different audio intelligence models.

You can read the docs.

You can also read a sample blog by Deepgram on How to Add Speech Recognition to Your React and Node.js Project.

If you want to try the APIs to see for yourself with flexibility in models, do check out their API Playground.

7. InvokeAI – leading creative engine for Stable Diffusion models.

InvokeAI is an implementation of Stable Diffusion, the open source text-to-image and image-to-image generator.

It runs on Windows, Mac, and Linux machines, and works on GPUs with as little as 4 GB of VRAM.

The solution offers an industry-leading WebUI, supports terminal use through a CLI, and serves as the foundation for multiple commercial products.

You can read about installation and hardware requirements, how to install different models, and, most importantly, automatic installation.

The exciting feature is the ability to generate an image using another image, as described in the docs.

InvokeAI has almost 21k stars on GitHub.

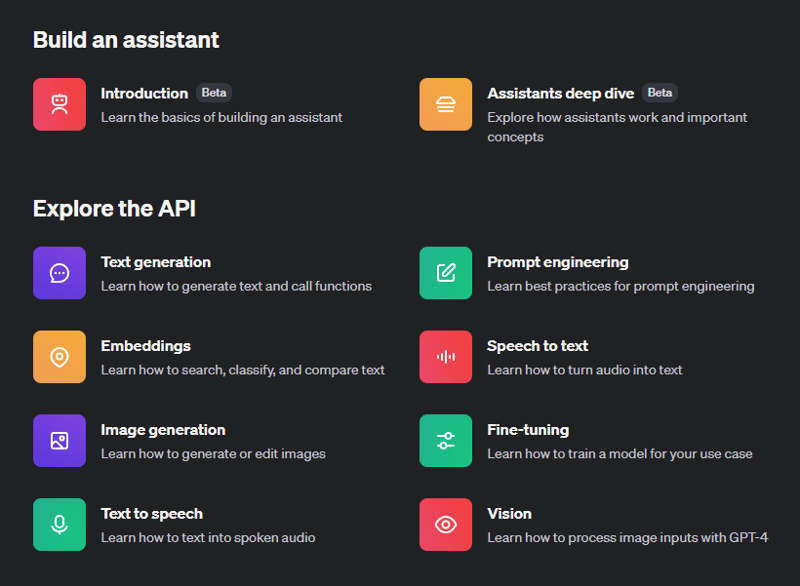

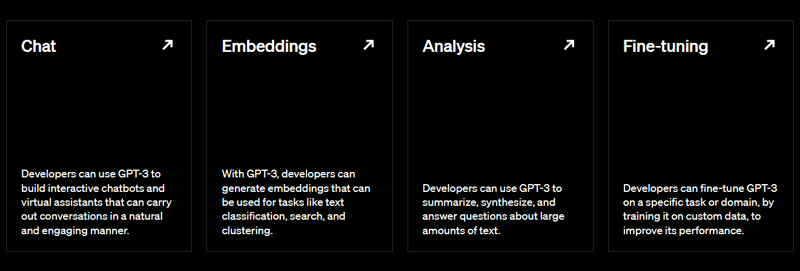

8. OpenAI – Everything you will ever need.

Gemini by Google and OpenAI are very popular, but we’re focusing on OpenAI for this list.

If you want to know more, you can read Google AI Gemini API in web using React 🤖 on Medium. It’s simple and to the point.

With OpenAI, you can use DALL·E (create original, realistic images and art from a text description), Whisper (speech recognition model), and GPT-4. Tell us about Sora in the comments!

You can start building with a simple API.

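from openai import OpenAI

client = OpenAI()  # reads the OPENAI_API_KEY environment variable

completion = client.chat.completions.create(

    model="gpt-3.5-turbo",

    messages=[

        {"role": "system", "content": "You are a helpful assistant."},

        {"role": "user", "content": "What are some famous astronomical observatories?"},

    ],

)

print(completion.choices[0].message.content)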

You can read the docs. It provides such an insane amount of options to build something very cool!

Even Stripe uses GPT-4 to improve user experience.

For instance, you can create Assistant applications and see the API playground to understand it better.

In case you want a guide, you can read Integrating ChatGPT With ReactJS by DZone.

By the way, OpenAI has also announced Sora, its own text-to-video model. What do you think?

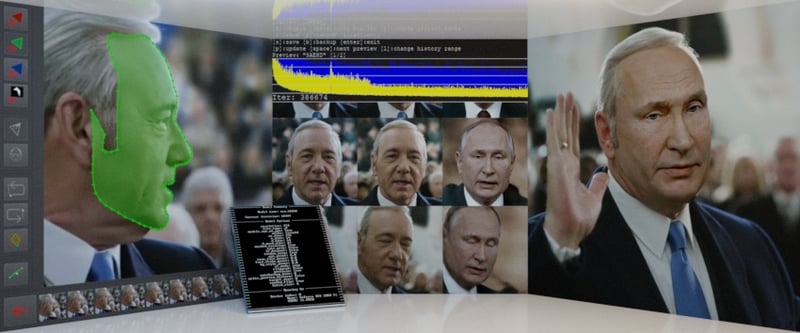

9. DeepFaceLab – leading software for creating deepfakes.

DeepFaceLab is the top open source tool for making deepfakes.

Deepfakes are altered images and videos made with deep learning. They’re often used to swap faces in pictures or clips, sometimes as a joke, but also for harmful reasons.

DeepFaceLab, built with Python, is a powerful deepfake tool. It can change faces in media and even erase wrinkles and signs of aging.

These are some of the things that you can do with DeepFaceLab.

- Replace the face.

- Replace the head.

- Manipulate the lips.

You can use this basic tutorial on how to use DeepFaceLab in an effective way to accomplish these things.

You can see videos on YouTube that use DeepFaceLab.

Unfortunately, there is no “make everything ok” button in DeepFaceLab, but it’s worthwhile to learn its workflow for your specific needs.

Although the repository was archived on November 9, 2023, it has 44k+ stars on GitHub and remains a solid choice for your AI app thanks to its numerous tutorials and reliable algorithms.

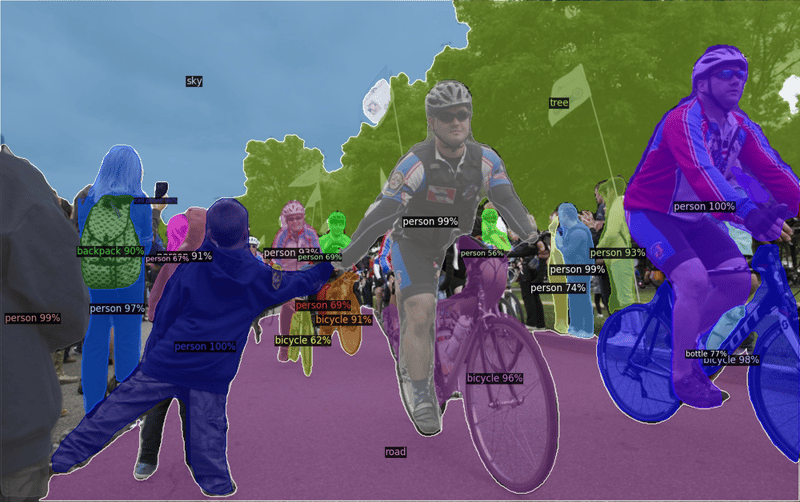

10. Detectron2 – a PyTorch-based modular object detection library.

Detectron2 is Facebook AI Research’s next-generation library that provides state-of-the-art detection and segmentation algorithms. It is the successor of Detectron and maskrcnn-benchmark.

It supports several computer vision research projects and production applications at Facebook.

You can follow this YouTube tutorial on using Detectron2 for machine learning, presented by a Developer Advocate at Facebook.

Detectron2 is designed to support a diverse array of state-of-the-art object detection and segmentation models, while also adapting to the continuously evolving field of cutting-edge research.

You can read How to Get Started and the Meta blog post, which covers the objectives of Detectron in depth.

The original Detectron was built on Caffe2, which made it hard to keep up with later code changes that merged Caffe2 into PyTorch. Responding to community feedback, Facebook AI released Detectron2 as an updated, easier-to-use version.

Detectron2 comes with advanced algorithms for object detection like DensePose and panoptic feature pyramid networks.

Plus, Detectron2 can do semantic segmentation and panoptic segmentation, which help detect and segment objects more accurately in images and videos.

Detectron2 not only supports object detection using bounding boxes and instance segmentation masks but also can predict human poses, similar to Detectron.

They have 28k+ Stars on GitHub repo and are used by 1.6k+ developers on GitHub.

11. FastAI – deep learning library.

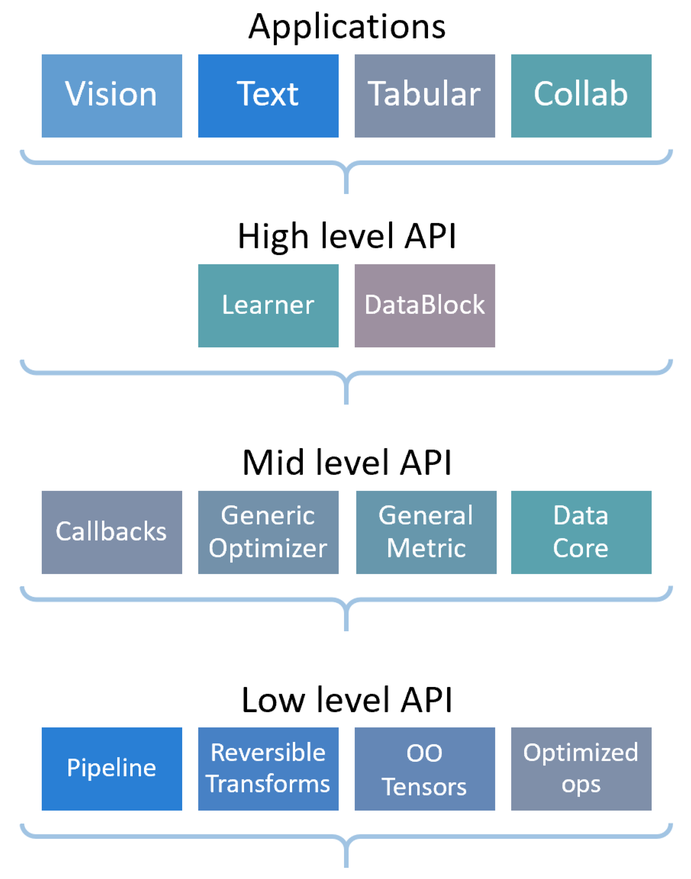

Fastai is a versatile deep-learning library designed to cater to both practitioners and researchers. It offers high-level components for practitioners to achieve top-notch results quickly in common deep-learning tasks.

At the same time, it provides low-level components for researchers to experiment and develop new approaches.

Fastai achieves a balance between ease of use and flexibility through its layered architecture.

This architecture breaks down complex deep-learning techniques into manageable abstractions, concisely using Python’s dynamic nature and PyTorch’s flexibility.

It is built on top of a hierarchy of lower-level APIs which provide composable building blocks. This way, a user wanting to rewrite part of the high-level API or add particular behavior to suit their needs does not have to learn how to use the lowest level.

Get started with the following command once you have installed PyTorch.

conda install -c fastai fastai

You can read the docs.

They have different starting points as tutorials for beginners, intermediate, and experts.

If you’re looking to contribute to FastAI, you should read their code style guide.

If you’re more into videos, you can watch Lesson “0”: Practical Deep Learning for Coders (fastai) on YouTube by Jeremy Howard.

They have 25k+ Stars on GitHub and are already used by 16k+ developers on GitHub.

12. Stable Diffusion – A latent text-to-image diffusion model.

What is Stable Diffusion?

Stable Diffusion is a latent diffusion model for text-to-image synthesis. Instead of producing an image in one shot, it starts from random noise and removes that noise gradually over many small steps, guided by the text description.

The "latent" part means the denoising happens in a compressed latent space rather than on raw pixels. A text encoder turns the prompt into an embedding that conditions each denoising step, and an autoencoder decodes the final latent into the image. This keeps generation efficient while producing high-quality, realistic images that align with the given textual input.

Because the image emerges through many small denoising steps, generation proceeds smoothly rather than in sudden jumps. I hope this clears things up!

A simple way to download and sample Stable Diffusion is by using the diffusers library.

# make sure you're logged in with `huggingface-cli login`

from torch import autocast

from diffusers import StableDiffusionPipeline

pipe = StableDiffusionPipeline.from_pretrained(

"CompVis/stable-diffusion-v1-4",

use_auth_token=True

).to("cuda")

prompt = "a photo of an astronaut riding a horse on mars"

with autocast("cuda"):

    image = pipe(prompt).images[0]

image.save("astronaut_rides_horse.png")

You can read the research paper and more about Image Modification with Stable Diffusion.

For instance, this is the input.

and this is the output after upscaling a bit.

Stable Diffusion v1 is a specific model configuration that employs an 860M UNet and a CLIP ViT-L/14 text encoder for the diffusion model, with a downsampling-factor 8 autoencoder. The model was pre-trained on 256×256 images and subsequently fine-tuned on 512×512 images.
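The numbers above are easier to picture with a little arithmetic. This small sketch computes the size of the latent that the denoiser works on for a given image size. The factor of 8 comes from the description above, while the 4 latent channels and the function names are assumptions that are not stated in this post.

```python
def latent_shape(height: int, width: int, factor: int = 8, channels: int = 4) -> tuple[int, int, int]:
    """Return (channels, height, width) of the latent for an image of the given size."""
    if height % factor or width % factor:
        raise ValueError("image dimensions must be divisible by the downsampling factor")
    return (channels, height // factor, width // factor)


def compression_ratio(height: int, width: int, factor: int = 8, channels: int = 4) -> float:
    """Ratio of RGB pixel values to latent values for the same image."""
    c, h, w = latent_shape(height, width, factor, channels)
    return (height * width * 3) / (c * h * w)


print(latent_shape(512, 512))       # (4, 64, 64)
print(compression_ratio(512, 512))  # 48.0
```

Under these assumptions, a 512×512 image is denoised as a 64×64 latent, which is a big part of why the model is practical to run on consumer GPUs.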

They have 64k+ Stars on the GitHub repository.

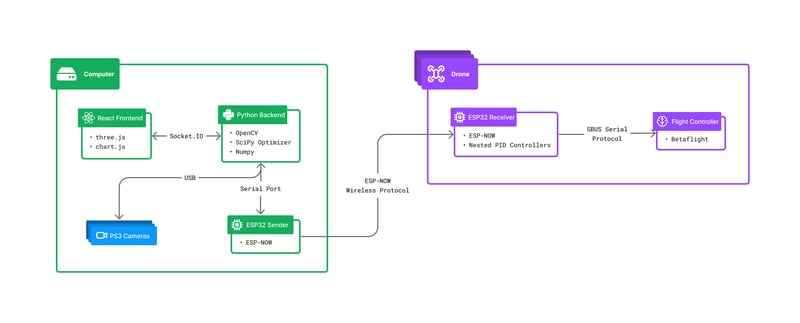

13. Mocap Drones – Low cost motion capture system for room scale tracking.

This project requires the SFM (structure from motion) OpenCV module, which requires you to compile OpenCV from the source.

From the computer_code directory, run this command to install node dependencies.

yarn install

yarn run dev # starts the web server

You will get a URL view of the frontend interface.

Open a separate terminal window and run the command python3 api/index.py to initiate the backend server. This server is responsible for receiving camera streams and performing motion capture computations.

The architecture is as follows.

You can see this YouTube video to understand how Mocap drones work or the blog by the owner of the project.

You can read the docs.

It is a recent open source project with 900+ stars on GitHub Repository.

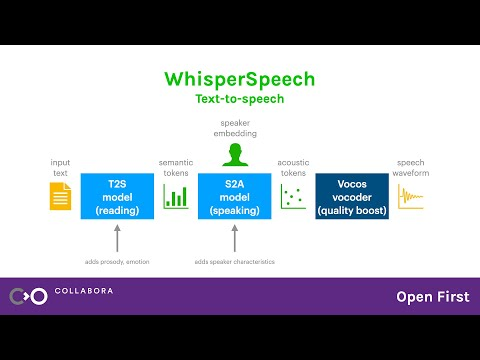

14. Whisper Speech – text-to-speech system built by inverting Whisper.

This model, similar to Stable Diffusion but for speech, is both powerful and highly customizable.

The team ensures the use of properly licensed speech recordings, and all code is open source, making the model safe for commercial applications.

Currently, the models are trained on the English LibriLight dataset.

You can study more about the architecture.

You can listen to the sample voices and use Colab to try it out yourself.

The project is fairly new and has 3k+ stars on GitHub.

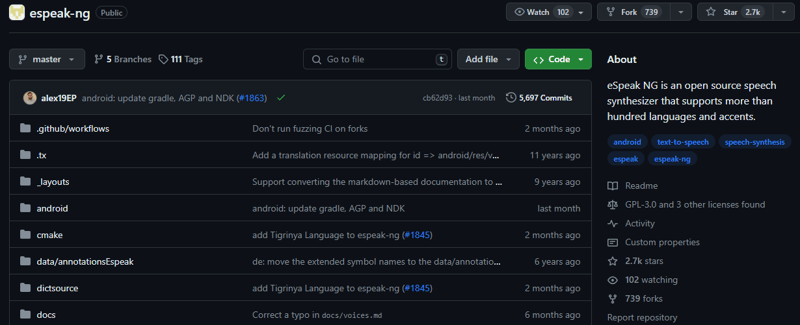

15. eSpeak NG – speech synthesizer that supports more than a hundred languages and accents.

The eSpeak NG is a compact open source software text-to-speech synthesizer for Linux, Windows, Android, and other operating systems. It supports more than 100 languages and accents. It is based on the eSpeak engine created by Jonathan Duddington.

You can read the installation guide on various systems.

For Debian-like distributions (e.g. Ubuntu, Mint, etc.), you can use this command.

sudo apt-get install espeak-ng

You can see the list of supported languages, read the docs and see features.

eSpeak NG can also translate text into phoneme codes, so it can serve as a front end for another speech synthesis engine.

They have 2.7k+ Stars on GitHub.
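If you want to try the phoneme output from Python, here is a minimal sketch that shells out to the command-line tool. It assumes `espeak-ng` is installed and on your PATH, and it relies on the `-q` (no audio), `-v` (voice), `-x` (phoneme mnemonics), and `--ipa` options. The helper names are my own and not part of eSpeak NG.

```python
import shutil
import subprocess


def phoneme_command(text: str, voice: str = "en", ipa: bool = False) -> list[str]:
    """Build the espeak-ng command that prints phonemes instead of speaking."""
    output_flag = "--ipa" if ipa else "-x"
    return ["espeak-ng", "-q", "-v", voice, output_flag, text]


def to_phonemes(text: str, voice: str = "en", ipa: bool = False) -> str:
    """Run espeak-ng and return the phoneme string it writes to stdout."""
    if shutil.which("espeak-ng") is None:
        raise RuntimeError("espeak-ng is not installed or not on PATH")
    result = subprocess.run(
        phoneme_command(text, voice, ipa),
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout.strip()
```

Calling `to_phonemes("hello world", ipa=True)` would return the IPA transcription, which you could then feed to another synthesis engine.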

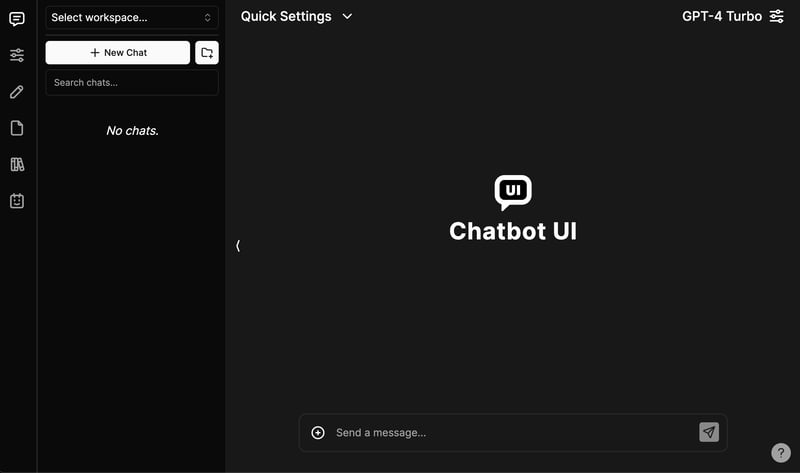

16. Chatbot UI – AI chat for every model.

We all have used ChatGPT, and this project can help us set up the user interface for any AI Chatbot. One less hassle!

You can read the installation guide to set up Docker, the Supabase CLI, and the other prerequisites.

You can read the docs and see the demo.

This uses Supabase (Postgres) under the hood, which is why we discussed it earlier.

I didn’t discuss the Vercel AI chatbot because it’s fairly new compared to this one.

Chatbot UI has 25k+ Stars on GitHub, so it is still the first choice for developers building a UI for any chatbot.

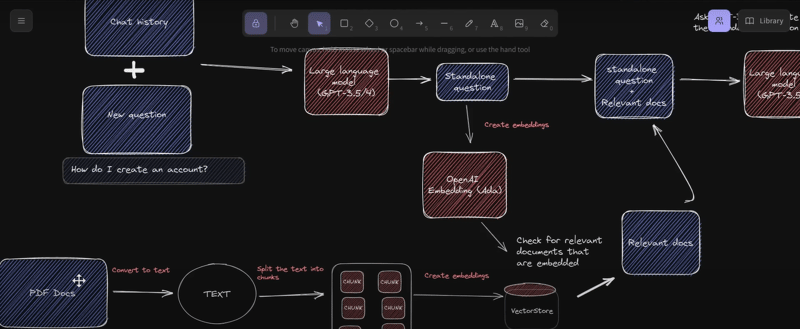

17. GPT-4 & LangChain – GPT4 & LangChain Chatbot for large PDF docs.

This project uses the GPT-4 API to build a ChatGPT-style chatbot over multiple large PDF files.

The system is built using LangChain, Pinecone, TypeScript, OpenAI, and Next.js. LangChain is a framework that simplifies the development of scalable AI/LLM applications and chatbots. Pinecone serves as a vector store for storing embeddings and your PDFs in text format, enabling the retrieval of similar documents later on.
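To show what "retrieval of similar documents" means, here is a toy sketch of a vector store in plain Python. It is not Pinecone's or LangChain's API: the word-count "embedding", the `ToyVectorStore` class, and the sample texts are all made up for illustration, and a real system would use an embedding model instead.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a real app would call an embedding model)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyVectorStore:
    """Keeps (embedding, text) pairs and returns the texts closest to a query."""

    def __init__(self) -> None:
        self._items: list[tuple[Counter, str]] = []

    def add(self, text: str) -> None:
        self._items.append((embed(text), text))

    def query(self, question: str, top_k: int = 1) -> list[str]:
        q = embed(question)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [text for _, text in ranked[:top_k]]


store = ToyVectorStore()
store.add("Invoices are due within 30 days of receipt")
store.add("The warranty covers parts and labor for two years")
print(store.query("how long is the warranty"))
```

In the chatbot, the chunks returned by a query like this are what gets passed to the model as context for answering the question.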

You can read the development guide, which covers cloning the repo, installing dependencies, and setting up environment variables and API keys.

You can see the YouTube video on how to follow along and use this.

They have 14k+ Stars on GitHub with just 34 commits. Try it out in your next AI app!

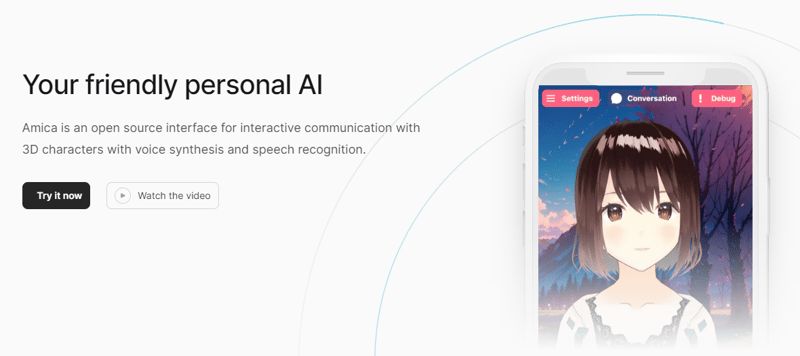

18. Amica – allows you to easily chat with 3D characters in your browser.

Amica is an open source interface for interactive communication with 3D characters with voice synthesis and speech recognition.

You can import VRM files, adjust the voice to fit the character, and generate response text that includes emotional expressions.

They use three.js, OpenAI, Whisper, Bakllava for vision, and many more. You can read on How Amica Works with the core concepts involved.

You can clone the repo and use this to get started.

npm i

npm run dev

You can read the docs and see the demo and it’s pretty damn awesome 😀

You can watch this short video on what it can do.

Amica uses Tauri to build the desktop application. Don’t worry, we have covered Tauri later in this list.

They have 400+ Stars on GitHub and it seems very easy to use.

19. Hugging Face Transformers – state-of-the-art Machine Learning for PyTorch, TensorFlow, and JAX.

Hugging Face Transformers provides easy access to state-of-the-art pre-trained models and algorithms for tasks such as text classification, language generation, and question answering. The library is built on top of PyTorch and TensorFlow, allowing users to seamlessly integrate advanced NLP capabilities into their applications with minimal effort.

With a vast collection of pre-trained models and a supportive community, Hugging Face Transformers simplifies the development of NLP-based solutions.

These models can be deployed to perform text-related tasks like text classification, information extraction, question answering, summarization, translation, and text generation, in more than 100 languages.

They can also handle image-related tasks such as image classification, object detection, and segmentation, as well as audio-related tasks like speech recognition and audio classification.

They can also perform multitasking on various modalities, including table question answering, optical character recognition, information extraction from scanned documents, video classification, and visual question answering.

You can see plenty of models that are available.

You can explore the docs for complete objectives and samples showing you about the various tasks you can perform.

For example, one way to use the pipeline is for image segmentation.

from transformers import pipeline

segmenter = pipeline(task="image-segmentation")

preds = segmenter(

"https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/pipeline-cat-chonk.jpeg"

)

preds = [{"score": round(pred["score"], 4), "label": pred["label"]} for pred in preds]

print(*preds, sep="\n")

Transformers is supported by the three most widely used deep learning libraries, namely JAX, PyTorch, and TensorFlow, with seamless integration among them. This integration makes it easy to train a model with one library and then load it for inference with another.

They have 120k+ stars on GitHub and are used by a massive 142k+ developers. Try it out!

20. LLAMA – Inference code for LLaMA models.

Llama 2 is a cutting-edge technology developed by Facebook Research that empowers individuals, creators, researchers, and businesses of all sizes to experiment, innovate, and scale their ideas responsibly using large language models.

The latest release includes model weights and starting code for pre-trained and fine-tuned Llama language models ranging from 7B to 70B parameters.
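As a rough guide to what those parameter counts mean in practice, this sketch estimates the memory needed just to hold the weights. It assumes 2 bytes per parameter (16-bit weights) and ignores activations and other runtime overhead, so treat the numbers as a lower bound. The 13B size is my addition to the 7B and 70B mentioned above.

```python
def weight_memory_gb(params_billion: float, bytes_per_param: int = 2) -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billion * bytes_per_param


for size in (7, 13, 70):
    print(f"{size}B parameters: about {weight_memory_gb(size):.0f} GB of weights")
```

Under these assumptions, the smallest model needs about 14 GB for its weights and the largest about 140 GB, which is why the bigger models are usually split across several GPUs.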

Get started with the installation guide covering the below steps.

- Clone and download the repository.

- Install the required dependencies.

- Register and download the model/s from the Meta website.

- Run the provided script to download the models.

- Run the desired model locally using the provided commands.

You can watch this YouTube video by ZeroToMastery on what Llama is.

You can also see the list of models and more info on Hugging Face and Meta official page.

Ollama is a related project for running Llama and other models locally. This repository has 50k+ stars on GitHub. See the docs and use this model for more research.

21. Fonoster – The open source alternative to Twilio.

Fonoster Inc. researches an innovative Programmable Telecommunications Stack that will allow for an entirely cloud-based utility for businesses to connect telephony services with the Internet.

There are various ways to start based on what you want to achieve.

Get started with the following npm command.

npm install @fonoster/websdk

// CDN is also available

For instance, this is how you can use Fonoster with Google Speech APIs. (you will need the key for the service account)

npm install @fonoster/googleasr @fonoster/googletts

This is how you can configure the Voice Server to use plugins.

const { VoiceServer } = require("@fonoster/voice");

const GoogleTTS = require("@fonoster/googletts");

const GoogleASR = require("@fonoster/googleasr");

const voiceServer = new VoiceServer();

const speechConfig = { keyFilename: "./google.json" };

// Set the server to use the speech APIs

voiceServer.use(new GoogleTTS(speechConfig));

voiceServer.use(new GoogleASR(speechConfig));

voiceServer.listen(async(req, res) => {

console.log(req);

await res.answer();

// To use this verb you MUST have a TTS plugin

const speech = await res.gather();

await res.say("You said " + speech);

await res.hangup();

});

You can read the docs.

They provide a free tier which is good enough to get started.

They have 6k+ stars on GitHub and more than 250 releases.